Feedback Illuminates the Rules

An excerpt from Dan Saffer’s book, Microinteractions: Designing with Details.

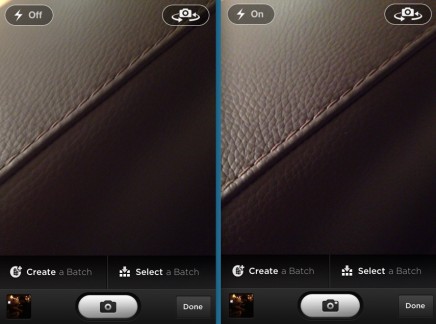

Unlike slot machines, which are deliberately designed to obscure the rules, microinteractions use feedback to help users understand how the rules work. If a user pushes a button, something should happen that indicates two things: that the button has been pushed, and what has happened as a result (Figure 4-2). Slot machines will certainly tell you the first half (that the lever was pulled), just not the second half (what is happening behind the scenes), because if they did, people probably wouldn’t play, or at least not as much. But since feedback doesn’t have to tell users how the microinteraction actually works (what the rules actually are), it should be just enough for users to build a working mental model of the microinteraction. Along with the affordances of the trigger, feedback should let users know what they can and cannot do with the microinteraction.

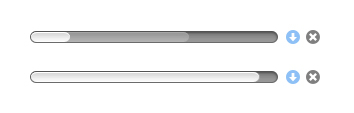

One caveat: you can have legitimate, nondeceitful reasons for not wanting users to know how the rules work. Users may not need to know every time a sensor is triggered or every time the device goes out to fetch data, only when something significant changes. For example, you don’t often need to know when there is no new email message, only when there is a new one. The first principle of feedback for microinteractions is to not overburden users with feedback. Ask: what is the least amount of feedback that can be delivered to convey what is going on (Figure 4-3)?

Feedback should be driven by need: what does the user need to know and when (how often)? Then it is up to the designer to determine what format that feedback should take: visual, audible, or haptic, or some combination thereof (see Figures 4-4 and 4-5).
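The email example above can be sketched in code. This is only an illustration of the "least amount of feedback" idea, not anything from the book: the `MailChecker` class, its `check` method, and the message wording are all hypothetical names chosen for the sketch.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class MailChecker:
    """Hypothetical mail-checking microinteraction (illustrative only)."""

    seen_ids: Set[str] = field(default_factory=set)

    def check(self, inbox_ids: List[str]) -> Optional[str]:
        """Return a feedback message only when something significant changed."""
        new_ids = set(inbox_ids) - self.seen_ids
        self.seen_ids |= new_ids
        if not new_ids:
            # Routine background check with nothing new: stay silent.
            return None
        count = len(new_ids)
        return f"{count} new message" + ("" if count == 1 else "s")


checker = MailChecker()
print(checker.check(["a", "b"]))       # 2 new messages
print(checker.check(["a", "b"]))       # None (no feedback needed)
print(checker.check(["a", "b", "c"]))  # 1 new message
```

The checker runs every time, but the user hears about it only when the result matters. What counts as "significant" here (any unseen message) is an assumption; a real product would decide that case by case.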

Feedback should occur:

Immediately after a manual trigger or following/during a manual adjustment of a rule. All user-initiated actions should be accompanied by a system acknowledgment (see Figure 4-6). Pushing a button should indicate what happened.

On any system-initiated triggers in which the state of the microinteraction (or the surrounding feature) has changed significantly. The significance will vary by context and will have to be determined on a case-by-case basis by the designer. Some microinteractions will (and should) run in the background. An example is an email client checking to see if there are new messages. Users might not need to know every time it checks, but will want to know when there are new messages.

Whenever a user reaches the edge (or beyond) of a rule. This would be the case of an error about to occur. Ideally, this state would never occur, but it’s sometimes necessary, such as when a user enters a wrong value (e.g., a password) into a field. Another example is reaching the bottom of a scrolling list when there are no more items to display.

Whenever the system cannot execute a command. For instance, the microinteraction cannot send a message because the device is offline. One caveat is that multiple attempts to execute the command could occur before the feedback that something is amiss. It might take several tries to connect to a network, for example, and knowing this, you might wait to show an error message until after several attempts have been made.

During any critical process, to show progress, particularly if that process will take a long time. If your microinteraction is about uploading or downloading, for example, it would be appropriate to estimate the duration of the process (see Figure 4-7).
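Two of the cases in the list above lend themselves to a small sketch: holding back an error message until several attempts have failed, and estimating how long a process will take. The function names, the default of three attempts, the message wording, and the simple average-rate estimate are all assumptions made for illustration. A real implementation would also pause between attempts.

```python
from typing import Callable, Optional


def send_with_quiet_retries(send: Callable[[], bool], max_attempts: int = 3) -> Optional[str]:
    """Call `send` up to `max_attempts` times.

    Returns None on success (no error feedback needed) and an error
    message only after every attempt has failed.
    """
    for _ in range(max_attempts):
        if send():
            return None
    return f"Could not send the message after {max_attempts} attempts."


def estimate_remaining_seconds(done: int, total: int, elapsed_seconds: float) -> Optional[float]:
    """Estimate time left from the average rate so far; None if there is no basis yet."""
    if total <= 0:
        raise ValueError("total must be positive")
    if done <= 0 or elapsed_seconds <= 0:
        return None
    rate = done / elapsed_seconds  # units completed per second so far
    return max(total - done, 0) / rate


def progress_message(done: int, total: int, elapsed_seconds: float) -> str:
    """Build the text a progress indicator might show."""
    remaining = estimate_remaining_seconds(done, total, elapsed_seconds)
    percent = 100 * done // total
    if remaining is None:
        return f"{percent}% complete"
    return f"{percent}% complete, about {round(remaining)} seconds remaining"


# The first two attempts fail silently; the third succeeds, so no error is shown.
attempts = iter([False, False, True])
print(send_with_quiet_retries(lambda: next(attempts)))  # None

# 25 of 100 units done in 10 seconds.
print(progress_message(25, 100, 10.0))  # 25% complete, about 30 seconds remaining
```

In both cases the system does more work than it reports: failed attempts stay invisible unless they add up to a real problem, and the estimate turns raw byte counts into something a person can act on.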

Feedback could occur:

At the beginning or end of a process. For example, after an item has finished downloading.

At the beginning or end of a mode or when switching between modes (Figure 4-8).

Always look for moments where the feedback can demystify what the microinteraction is doing; without feedback, the user will never understand the rules.

Feedback Is for Humans

While there is certainly machine-to-machine feedback, the feedback we’re most concerned with is communicating to the human beings using the product. For microinteractions, that message is usually one of the following:

Something has happened

You did something

A process has started

A process has ended

A process is ongoing

You can’t do that

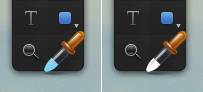

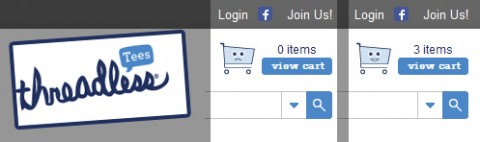

Once you know what message you want to send, the only decisions remaining are how these messages manifest, as in Figures 4-9 through 4-11. The kind of feedback you can provide depends entirely upon the type of hardware the microinteraction is on. On a mobile phone, you might have visual, audible, and haptic feedback available. On a piece of consumer electronics, feedback might be visual only, in the form of LEDs.

Let’s take a microinteraction appliance like a dishwasher as an example. The dishwasher process goes something like this: a user selects a setting, turns the dishwasher on, the dishwasher washes the dishes and stops. If someone opens the dishwasher midprocess, it complains. Now, if the dishwasher has a screen, each of these actions could be accompanied by a message on the screen (“Washing Dishes. 20 minutes until complete.”). If there is no screen, there might be only LEDs and sounds to convey these messages. One option might be that an LED blinks while the dishwasher is running, and a chime sounds when the washing cycle is completed.
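The dishwasher example can be sketched as a mapping from events to feedback that depends on the available hardware. Only the "Washing Dishes" screen text, the blinking LED, and the completion chime come from the description above. The `Event` names, the `feedback_for` function, and every other message or signal are assumptions added for illustration.

```python
from enum import Enum
from typing import List


class Event(Enum):
    STARTED = "started"
    RUNNING = "running"
    FINISHED = "finished"
    DOOR_OPENED_MIDCYCLE = "door_opened_midcycle"


def feedback_for(event: Event, has_screen: bool, minutes_left: int = 0) -> List[str]:
    """Pick feedback for the same message based on what the hardware can do."""
    if has_screen:
        screen_text = {
            Event.STARTED: "Starting wash cycle.",  # assumed wording
            Event.RUNNING: f"Washing Dishes. {minutes_left} minutes until complete.",
            Event.FINISHED: "Dishes are clean.",  # assumed wording
            Event.DOOR_OPENED_MIDCYCLE: "Cycle paused. Close the door to resume.",  # assumed
        }
        return [f"screen: {screen_text[event]}"]
    # No screen: fall back to LEDs and sounds.
    led_and_sound = {
        Event.STARTED: ["led: on"],  # assumed
        Event.RUNNING: ["led: blink"],
        Event.FINISHED: ["led: off", "sound: chime"],
        Event.DOOR_OPENED_MIDCYCLE: ["led: fast blink", "sound: warning beep"],  # assumed
    }
    return led_and_sound[event]


print(feedback_for(Event.RUNNING, has_screen=True, minutes_left=20))
print(feedback_for(Event.RUNNING, has_screen=False))
print(feedback_for(Event.FINISHED, has_screen=False))
```

The message ("a process is ongoing", "a process has ended") stays the same across both versions of the appliance; only its manifestation changes.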

Text (written) feedback is not always an option (for example, if there is no screen or simply no screen real estate). Once we move past actual words (and let’s not forget that a substantial portion of the planet’s population is illiterate: 793 million adults, according to the Central Intelligence Agency), we have to convey messages via other means: sound, iconography, images, light, and haptics. Since these are not text (and even words can be vague and slippery), they can be open to interpretation. What does that blinking LED mean? When the icon changes color, what is it trying to convey? Some feedback is clearly learned over time: when that icon lights up and I click it, I see there is a new message. The “penalty” for clicking (or acting on) any feedback that might be misinterpreted should be none. If I can’t guess that the blinking LED means the dishwasher is in use, opening the dishwasher shouldn’t spray me with scalding hot water. In fact, neurologically, errors improve performance; humans learn when our expectations don’t match the outcome.

The second principle of feedback is that the best feedback is never arbitrary: it always exists to convey a message that helps users, and there is a deep connection between the action causing the feedback and the feedback itself. Pressing a button to turn on a device and hearing a beep is practically meaningless, as there is no relationship between the trigger (pressing the button) or the resulting action (the device turning on) and the resulting sound. It would be much better to either have a click (the sound of a button being pushed) or some visual/sound cue of the device powering up, such as a note that increases in pitch. Arbitrary feedback makes it harder to connect actions to results, and thus harder for users to understand what is happening. The best microinteractions couple the trigger to the rule to the feedback, so that each feels like a “natural” extension of the other.
