New Technologies to Consider for Interaction

The following is an excerpt from Chris Noessel’s new book, Designing Agentive Technology, published by Rosenfeld Media.

One of the fun things we get to consider when dealing with artificial intelligence is that in order to enable it to carry out its seeing, thinking, and doing duties, we must include cutting-edge technologies in the system. Trying to list these authoritatively is something of a fool’s errand, because by the time the book is published, some will have already fallen out of use or become unremarkable, and there will be some new ones to consider. Also, I wouldn’t pretend to have collected a complete list. But by understanding them in terms of seeing, thinking, and doing, we can more quickly understand their purpose for an agent, and thereby for the user. We can also begin to think in terms of these building blocks when designing agentive technologies, to have them in our backpack. We can also have a frame for contextualizing future technologies as they become available. So, at the risk of providing lists that are too cursory, I’ve built the following based on existing APIs, notably IBM’s Watson and a bit of Microsoft’s Cognitive Services.

Seeing

The agent needs to be able to sense everything it needs in order to perform its job at least as well as the user, and in many cases, in ways the user can’t sense. While many of these sensing technologies seem simple and unremarkable for a human, teaching a computer to do these things is a remarkable achievement in and of itself, and very useful for equipping agents to do their jobs.

- Object recognition: Enables a computer to identify objects present in an image or video feed.

- Face recognition: Helps a computer identify a person by recognizing their facial features.

- Biometrics: Helps identify a person through physical metrics. There are dozens of biometric technologies including fingerprints, voice prints, and even the unique pattern of capillaries in the eyes or just beneath the surface of the face. Biometrics can also assist other higher-order algorithms; heart rate, for example, can help convey stress in affective computing.

- Gaze monitoring: Helps the computer determine where the people around it are looking, and infer intention, context, and even pragmatic meaning from it.

- Natural language processing: Allows the user to give instructions or ask questions of a computer in everyday language. The algorithm can also identify keywords, unique phrases, and high-level concepts in a given text.

- Voice recognition: Computer parses the messy sounds of human speech into language.

- Handwriting recognition: User inputs data and instructions through handwritten text.

- Sentiment: Computer determines the pragmatic sense of a text; whether it is positive or negative, or even whether the speaker is being ironic.

- Gesture recognition: Computer interprets the meanings conveyed through body positions and motions.

- Activity recognition: Computer infers what activities a person is engaging in, and it changes modes to accommodate the different activities. A simple example is helping computers understand that people need to sleep, recognize when this is happening, and know that their behavior should change during this time.

- Affect recognition: Computer infers a user’s emotional state from a variety of inputs such as the tone of their voice, their gestures, or their facial expressions.

- Personality insights: Simply by moving through a connected world and participating in social media, we tell a lot about ourselves and our opinions, interests, and problems. If a user gives an agent permission to access these digital trails, many of the user’s goals, personality traits, and frustrations can be inferred, saving them the trouble of having to tell the agent explicitly.

The Coming Flood of Inferences

Much of this list of sensing technologies feels intuitive and what a human might call “direct.” For instance, you can observe a transcript of what I just told my phone, and point to the keywords by which it understood that I wanted it to set a 9-minute timer. In fact, it’s not direct at all; it’s a horribly complicated ordeal to get to that transcript, but it feels so easy to us that we think of it as direct.

But there is vastly more data that can be inferred from direct data. For instance, most people balk at the notion that the government has access to actual recordings of their telephone conversations, but much less so about their phone’s metadata, that is, the numbers that were called, the order in which they were called, and how long the conversations were.

Yet in 2016, John C. Mitchell and Jonathan Mayer of Stanford University published a study titled “Evaluating the privacy properties of telephone metadata.” In it they wrote that narrow AI software, analyzing test subjects’ phone metadata with some smart heuristics, was able to determine some deeply personal things about them, such as that one was likely suffering from cardiac arrhythmia, and that another owned a semi-automatic rifle. The personal can be inferred from the impersonal.

Similarly, it’s fairly common practice for websites to watch what you’re doing, where you’ve come from, and what they know you’ve done in the past in order to break users into demographic and psychographic segments. If you’ve liked a company in the past and visit their site straight from an advertising link that’s gone viral, you’re in a different bucket than the person who goes to their page after having gone to Consumer Reports, and the site adjusts itself accordingly.

These two examples show that in addition to whatever data we could get from direct sensing technologies, we can expect much, much more data from inference engines.
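To make the segmentation example concrete, here is a minimal sketch of how a site might sort visitors into buckets from indirect signals alone. Everything in it is an assumption made for illustration: the `Visit` fields, the referrer labels, and the segment names are hypothetical, and a real system would likely use far more signals and statistical models rather than two hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    referrer: str              # hypothetical label for where the visitor came from
    liked_brand_before: bool   # hypothetical flag derived from past behavior


def segment(visit: Visit) -> str:
    """Assign a visitor to an illustrative segment from indirect signals."""
    # A past fan arriving from a viral ad lands in one bucket.
    if visit.liked_brand_before and visit.referrer == "viral_ad":
        return "enthusiast"
    # Someone arriving from a product-review site lands in another.
    if visit.referrer == "consumer_reports":
        return "comparison_shopper"
    # Without a matching signal, the site has nothing to infer.
    return "unknown"


fan = Visit(referrer="viral_ad", liked_brand_before=True)
researcher = Visit(referrer="consumer_reports", liked_brand_before=False)
print(segment(fan))
print(segment(researcher))
```

Note that neither visitor told the site anything about themselves; the bucket is inferred entirely from where they came from and what they did before.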

Thinking

Though largely the domain of artificial intelligence engineering, it’s interesting to know what goes into the sophisticated processing of artificial intelligence. To a lesser extent, this knowledge can inform the design of these systems, although collaboration with developers actually working on the agentive system is the best way to understand real-world capabilities and constraints.

- Domain expertise: Grants an agent an ontological model of the domain in which it acts. This can be as simple as awareness of the calendar or a fixed pattern for sweeping a floor, or as complex as thermodynamics.

- Common-sense engines: Encode a body of knowledge that most people would regard as readily apparent about the world, such as that “a rose is a plant” and that “all plants need water to live.” Although people consider such things unremarkable, computers must be taught them explicitly.

- Reasoners/inference engines: Make use of common-sense engines, the semantic web, and natural language parsing to make inferences about the world such as “a rose needs water to live” from the givens above.

- Predictive algorithms: Allow a computer to predict variable outcomes, within a range of confidence, based on a set of givens, and to act according to its confidence in a particular outcome happening.

- Machine learning: Enables a computer to identify patterns in data, as well as improve its performance of a task to be more effective toward a goal.

- Trade-off analytics: Can make a recommendation for users balancing multiple objectives, even with many factors.

- Prediction: By comparing individual cases against past examples, algorithms can predict what will likely happen next. This can be as small as the next letters in an incomplete word, next words in an incomplete sentence, or as large as what a user is likely to do or take interest in next.
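The rose example from the reasoner entry above can be sketched in a few lines. This is only a toy forward-chaining loop under stated assumptions: the triple format, the `is_a` relation name, and the `infer` function are hypothetical choices for illustration, not how any particular common-sense engine or reasoner is built.

```python
# Facts are (subject, relation, object) triples the system has been taught.
facts = {("rose", "is_a", "plant")}

# One general rule: every member of a category inherits a property.
# ("plant", "needs", "water") reads as "all plants need water."
rules = [("plant", "needs", "water")]


def infer(facts, rules):
    """Forward-chain: keep applying rules until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for category, relation, obj in rules:
            for subject, rel, kind in list(known):
                if rel == "is_a" and kind == category:
                    new_fact = (subject, relation, obj)
                    if new_fact not in known:
                        known.add(new_fact)
                        changed = True
    return known


derived = infer(facts, rules)
print(("rose", "needs", "water") in derived)  # True
```

The point of the sketch is that "a rose needs water" was never entered directly; it falls out of combining a taught fact with a taught rule.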

Doing

- Screens: Let agents convey graphic information.

- Messages: Let agents convey textual information, often to a user’s mobile devices.

- Sound: Lets agents convey information audibly.

- Speech synthesis: Lets agents generate human-sounding speech for conversational output.

- Haptic actuators: Let agents generate touch sensations. The vibrator in your smartphone is one example, and the rumble pack in video game console controllers is another.

- Robotics: Let agents control a physical device precisely. This can range from something as “simple” as the appliances in a home to something as complicated as car-manufacturing robot arms on a factory floor, or something as nuanced as conveying information and emotion through expression.

- Drones: Let agents control a mobile robot, whether swimming, driving, flying, or propelling through space.

- APIs: Allow computers to interact with other computer systems and other agents, passing information, requests, or responses back and forth, including across the world via the internet, and across the room through short-distance wireless.

Handling a Range of Complexity

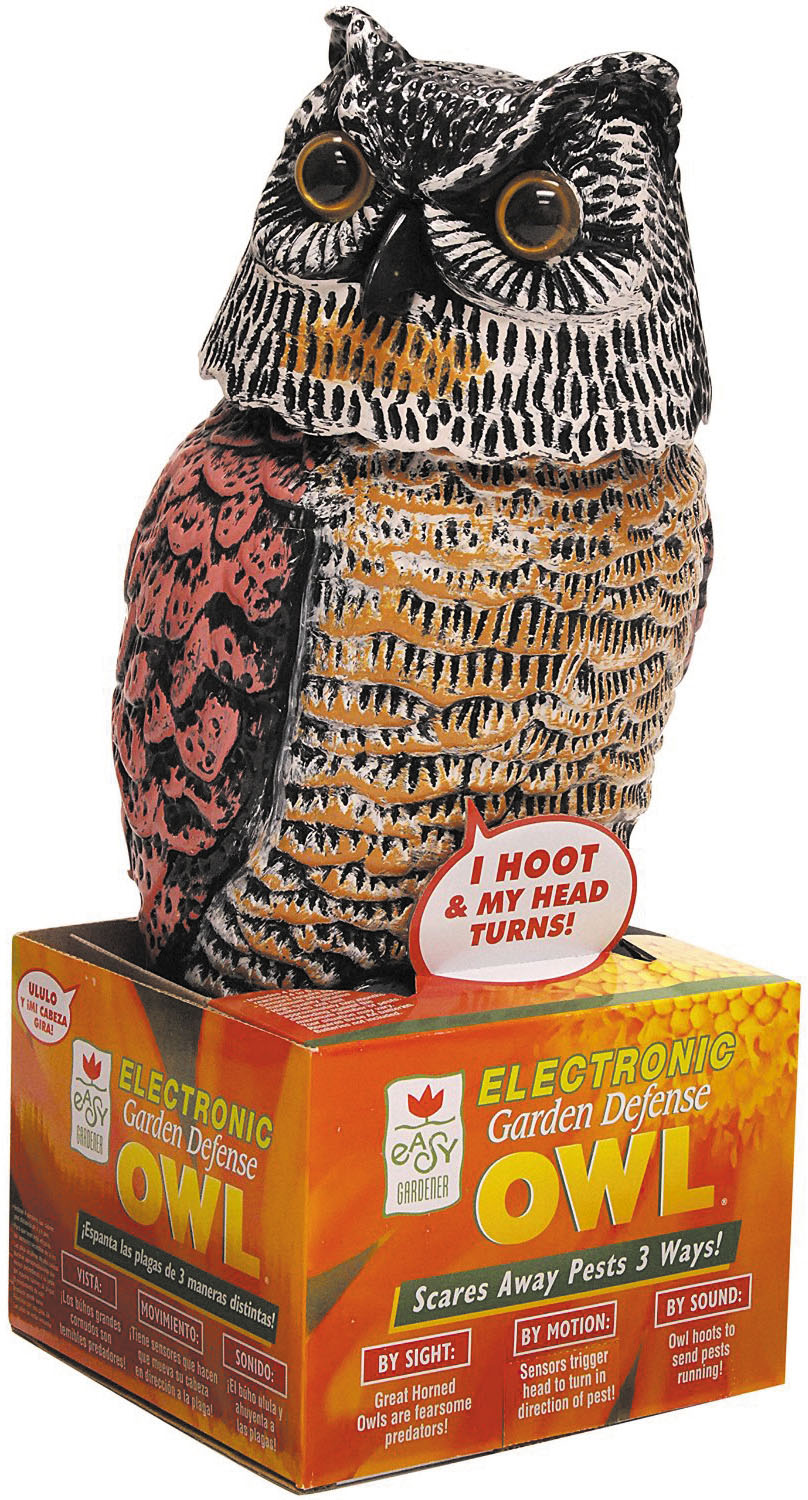

In the next chapters, we’re going to look at a collection of use cases to consider when designing agentive technology. Please note that I tried to be comprehensive, which means there are a lot. But your agent may only need a few, or not even any. Consider one of my favorite examples, the Garden Defense Electronic Owl. It has a switch to turn it on, and thereafter it turns its scary owl face toward detected motion and hoots. That’s all it does, and all it needs to do. If you’re building something that simple, you won’t need to study setup patterns or worry how it might hand its responsibilities off to a human collaborator.

Simpler agents may involve a handful of these patterns, and highly sophisticated, mission-critical agents may involve all of these and more. It is up to you to understand which of these use cases apply to your particular agent.
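To show how little a simple agent can require, here is a rough sketch of the owl’s behavior framed as one seeing, thinking, and doing cycle. It is a hypothetical model written for illustration only: the `ElectronicOwl` class, the bearing-in-degrees sensor reading, and the action strings are assumptions, not a description of the actual product.

```python
from typing import List, Optional


class ElectronicOwl:
    """Hypothetical model of a very simple agent: one switch, one behavior."""

    def __init__(self) -> None:
        self.is_on = False
        self.heading = 0.0  # direction the face points, in degrees

    def switch_on(self) -> None:
        self.is_on = True

    def step(self, motion_bearing: Optional[float]) -> List[str]:
        """Run one see-think-do cycle and return the actions taken."""
        # Seeing: motion_bearing is the sensor reading (None means no motion).
        if not self.is_on or motion_bearing is None:
            return []
        # Thinking: the only rule is "react to any detected motion."
        self.heading = motion_bearing % 360
        # Doing: turn the face toward the motion, then hoot.
        return [f"turn to {self.heading:.0f} degrees", "hoot"]


owl = ElectronicOwl()
print(owl.step(90.0))   # the owl is still off, so nothing happens
owl.switch_on()
print(owl.step(90.0))   # motion detected: turn and hoot
print(owl.step(None))   # no motion: stay put
```

There is no setup flow, no tuning, and no handoff to a human in this sketch, which is why an agent of this kind can skip most of the use cases that follow.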
